It works!

My project is, at a basic level, functioning.

For now it’s called the Data Injector, but I’m trying to come up with a better name. Maybe by the time you’re done reading, you’ll have thought of one. If you do, let me know; I might very well use it!

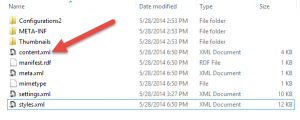

It starts with an .odt file. This is a fairly unassuming open document format, and one that both Microsoft Office and the open-source alternative LibreOffice can open, edit, and save to. If I say something about this document that doesn’t make sense, or if you’re just curious, I recommend my article on the subject, since it was written specifically in preparation for the one you’re reading now.

However, this is not just any .odt file. It has... input fields! Which are actually fairly boring. They don’t really do anything interesting, except subtly let you know they’re there, usually by highlighting themselves gray. They also let you name them, and they’re quite easy to put in. The really interesting part is what you put inside them, and what that lets you do.

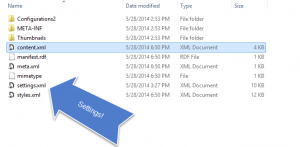

Remember when we took one of these .odt documents apart and put it back together? (Yeah, sorry I haven’t played with a .docx file yet. I’ve been busy. Hopefully soon you’ll see with what.) Well, there was that content.xml file. These input fields insert special tags that are named whatever you chose to name the input field. In our case, we named them “Template” so as to make it obvious what they’re for.
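If you want to poke at this yourself, here is a rough sketch of finding those fields. It's in Python purely for illustration (my project isn't written in Python), and it assumes that input fields show up in content.xml as `text:text-input` elements with the field's name stored in a `text:description` attribute, which is how I understand the OpenDocument format. The `make_demo_odt` helper and the `@Model.Name` field content are made up for the example, and the fake archive it builds is not a complete, valid .odt file.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

# Namespace that OpenDocument uses for its text: elements.
TEXT_NS = "urn:oasis:names:tc:opendocument:xmlns:text:1.0"


def list_input_fields(odt_bytes):
    """Return (name, content) pairs for every input field in content.xml."""
    # An .odt file is a zip archive; content.xml holds the document body.
    with zipfile.ZipFile(io.BytesIO(odt_bytes)) as archive:
        root = ET.fromstring(archive.read("content.xml"))
    fields = []
    for field in root.iter(f"{{{TEXT_NS}}}text-input"):
        name = field.get(f"{{{TEXT_NS}}}description", "")
        fields.append((name, field.text or ""))
    return fields


def make_demo_odt():
    """Hypothetical helper: a zip holding only a tiny stand-in content.xml."""
    content = (
        f'<doc xmlns:text="{TEXT_NS}"><text:p>Dear '
        '<text:text-input text:description="Template">@Model.Name</text:text-input>'
        ",</text:p></doc>"
    )
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("content.xml", content)
    return buffer.getvalue()


print(list_input_fields(make_demo_odt()))
```

With a real document you would read the file's bytes from disk and pass them to `list_input_fields` instead of using the demo helper.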

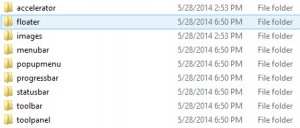

But that’s not all. It also has... sections and script tags! Which, like input fields, mark certain things in the XML. Sections divide the page into, well, sections; they’re kind of like paragraphs but with more visibly defined upper and lower edges. Script tags are like input fields except they don’t occupy any space in the document; they just show up as little gray rectangles that can go anywhere without moving anything else. They’re intended for containing computer code to be read and executed, and we’ll be using them for just that. As I’ll explain later, though, in our case they go inside the sections.

Microsoft developed a tool called Razor that can automatically detect and execute code formatted in a specific way in a document. The word “document” in the computer world generally refers to any file that is intended to be read rather than executed. We’re working with XML, which is a language used to describe things, and is formatted nicely in a way that is easy for computers to read. Thus, any XML file is also an XML document.

Razor is usually used to generate content in web pages dynamically (read: whenever you need it, as opposed to being generated once and never changing). For this reason, it works very nicely with markup languages like HTML and XML, which are designed to contain and present that content. Since it works so well with XML, it’s not terribly difficult to use it to insert things into a normal XML document (like content.xml from the .odt file) instead of a web page.

There was already a library that takes a string as a template and gets its data from a POCO (Plain Old CLR Object, which for our purposes means a plain C# class) that you hand it as the model. Using the Razor parsing engine, it finds any Razor statements in the template and brings in the data from the model. (String is a term that means any information represented as text rather than in a way designed for the computer to understand it. It comes from stringing a bunch of characters together.)
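To make the template-plus-model idea concrete, here is a toy version in Python. It is not Razor and not the library I used; it only understands a made-up subset where `@Model.Something` is swapped for the matching attribute on the model. The `Invoice` class and the `render` function are invented for this example.

```python
import re
from dataclasses import dataclass


@dataclass
class Invoice:
    """Hypothetical model: a plain object that just carries data."""
    Name: str
    Total: float


def render(template, model):
    """Replace each @Model.X in the template string with model.X."""
    def lookup(match):
        return str(getattr(model, match.group(1)))
    return re.sub(r"@Model\.(\w+)", lookup, template)


print(render("Dear @Model.Name, you owe @Model.Total.", Invoice("Ada", 12.5)))
# prints: Dear Ada, you owe 12.5.
```

The real Razor engine does far more than this (loops, conditions, arbitrary C# expressions), but the shape is the same: a string goes in with a model, and a filled-in string comes out.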

I had a library, and I had a template. Models I could make on my own, at least for testing purposes. Most of my problems came from either not being familiar with Razor (I’m getting there, little by little) or from getting the components or services to work together. One wrinkle: Razor relies heavily on @ signs, and we needed to be able to have them in the document without Razor trying to parse them. This is where things like input fields, script tags, and sections come in handy.
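One way to keep ordinary @ signs safe is to double them everywhere except inside the fields, since Razor treats `@@` as a literal `@`. I'm not claiming this is exactly what my code does internally; it's a minimal Python sketch of the idea, with a tiny stand-in renderer in place of Razor. The function names and the `(is_field, text)` representation of the document are assumptions made up for the example.

```python
import re


def escape_literal_text(text):
    """Double every @ so a Razor-style engine treats it as plain text."""
    return text.replace("@", "@@")


def build_template(pieces):
    """pieces is a list of (is_field, text); only field text stays live."""
    return "".join(
        text if is_field else escape_literal_text(text)
        for is_field, text in pieces
    )


def render(template, values):
    """Stand-in for Razor: '@@' becomes '@', '@Model.X' becomes values['X']."""
    def substitute(match):
        if match.group(0) == "@@":
            return "@"
        return str(values[match.group(1)])
    return re.sub(r"@@|@Model\.(\w+)", substitute, template)


pieces = [(False, "Mail @Model.Name at "), (True, "@Model.Email")]
template = build_template(pieces)
print(template)
# prints: Mail @@Model.Name at @Model.Email
print(render(template, {"Email": "ada@example.com"}))
# prints: Mail @Model.Name at ada@example.com
```

The first piece was ordinary document text, so its `@Model.Name` survives untouched, while the second piece came from an input field and gets filled in.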

Loading up the XML file and being able to change it was fairly easy. Correctly transforming it into what we needed was harder, but doable. Control-flow statements (where sometimes we want to do the same thing multiple times with different information, or decide whether to display one piece of data based on the value of a different one) were more… interesting.

Regular insertion of information just uses input fields, which are easy to find and process. Control-flow statements, on the other hand, live in script tags that sit inside the very section tags they control. This presents an interesting problem: how do you execute a statement that affects a block of XML when that block contains the statement itself? The answer was pretty simple, but it required some knowledge about how Razor works that I didn’t have at the time. The solution was to modify the block of XML that contained not only the script but the entire section, so that it was all inside the Razor statement (which was removed from inside the script tag and placed so that it surrounded the section).

For anyone I lost somewhere in there, it looked like this:

<main document>

<section>

<script>

Razor expression ( )

<end script>

<end section>

<rest of document>

And afterward it looked like this:

<main document>

Razor expression (

<section>

<end section>

) [This marks the end of the Razor expression]

<rest of document>

which is pretty neat.
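For the curious, here is roughly what that rearranging could look like in Python with the standard library's ElementTree. This is a sketch under several assumptions, not my actual code: it assumes sections are `text:section` elements and scripts are `text:script` elements, that each script holds only the opening statement (the sketch adds the braces itself), and that sections are not nested. The `hoist_scripts` and `append_before` names are invented for the example.

```python
import xml.etree.ElementTree as ET

TEXT_NS = "urn:oasis:names:tc:opendocument:xmlns:text:1.0"
ET.register_namespace("text", TEXT_NS)
SECTION = f"{{{TEXT_NS}}}section"
SCRIPT = f"{{{TEXT_NS}}}script"


def append_before(parent, child, text):
    """Append text to whatever character data sits directly before child."""
    siblings = list(parent)
    position = siblings.index(child)
    if position == 0:
        parent.text = (parent.text or "") + text
    else:
        previous = siblings[position - 1]
        previous.tail = (previous.tail or "") + text


def hoist_scripts(content_xml):
    """Move each section's script statement so it wraps the whole section."""
    root = ET.fromstring(content_xml)
    # ElementTree has no parent pointers, so build a child -> parent map.
    parents = {child: parent for parent in root.iter() for child in parent}
    for section in list(root.iter(SECTION)):
        script = next(section.iter(SCRIPT), None)
        section_parent = parents.get(section)
        if script is None or section_parent is None:
            continue
        statement = (script.text or "").strip()
        # Take the script out of the section, keeping any text after it.
        script_parent = parents[script]
        append_before(script_parent, script, script.tail or "")
        script_parent.remove(script)
        # Open the block just before the section and close it just after.
        append_before(section_parent, section, statement + " {")
        section.tail = "}" + (section.tail or "")
    return ET.tostring(root, encoding="unicode")


sample = (
    f'<doc xmlns:text="{TEXT_NS}"><text:section text:name="Rows"><text:p>'
    "<text:script>@foreach (var row in Model.Rows)</text:script>Row"
    "</text:p></text:section><text:p>After</text:p></doc>"
)
print(hoist_scripts(sample))
```

In the printed result the script element is gone, the `@foreach` statement and an opening brace sit immediately before the section, and a closing brace sits immediately after it. One caveat with this approach: ElementTree escapes characters like `<`, `>`, and `&` when it writes text back out, so a statement containing a comparison would need extra handling before Razor saw it.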

So after writing tests to ensure it was working as intended, version 1.0 was done. It still needs internal revision and better testing, both of which I’m working on. It also has a lot of expansion coming, and will hopefully end up being much more interesting than it is now. But it already does a small part of what it is eventually supposed to do, and it does so decently well.

Speaking of which, I’ve explained to you how this works, but not directly shown you what it does. How about a little show for our show and tell?

(Disclaimer as before: These images probably look really fuzzy embedded in my post. Click on them with your middle mouse button/mouse wheel and they will open in a new tab, with much better quality. You won’t lose your spot on the post.)

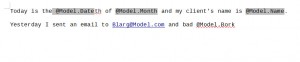

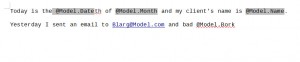

This is what my template document looks like before running through my Data Injector. Note the gray parts; those are input fields, and will be detected, interpreted, and filled in. The second line contains things that might normally be executed as Razor statements, but because of computer magic the library knows to interpret only the things inside the input fields as Razor.

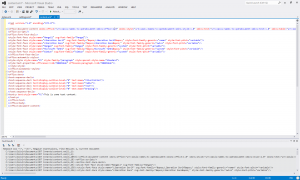

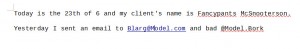

After running the template through the program, with the appropriate model, it looks like this:

(First rule of programming: the computer will do what you tell it to, regardless of whether that was what you actually wanted it to do)

While the template and model here could use a bit of polishing, the program itself worked correctly. There are plenty of applications for this sort of thing, including quite a few that I never would have considered. How many can you think of, and what do you think is a better name for it than the rather bland Data Injector? Let me know in the comments. Comments are good.

Hope you enjoyed this, and I’ll see (read?) you next time. (Which will hopefully come after a gap closer to the one between my first two posts than the one between this post and the previous one.)